A Guide to AI Governance

Defining Governance, Safety, Trust, Responsibility, and Risk

As artificial intelligence transforms industries and reshapes our world, organizations face mounting pressure to deploy AI systems that are not only powerful but also ethical, safe, and aligned with human values. Yet the terminology around AI ethics can be confusing: overlapping concepts are often used interchangeably even though they carry distinct meanings and implications.

In my conversations with business leaders, I've noticed considerable confusion about what constitutes AI governance versus safety, how trustworthy AI differs from responsible AI, and where risk management fits. This lack of clarity isn't just a semantic issue—it creates real challenges in implementing effective AI oversight frameworks and meeting emerging regulatory requirements.

In this article, I want to demystify these concepts by offering clear definitions and practical insights into how they interconnect.

AI Governance: The Framework for Ethical AI

AI governance encompasses the policies, oversight structures, and processes that ensure AI systems are developed and deployed responsibly and in compliance with laws and ethical principles. Think of governance as the overarching framework that enables and enforces all other aspects of ethical AI.

Effective AI governance includes:

Strategic oversight: Establishing committees or boards with direct accountability for AI initiatives and their outcomes

Policy development: Creating clear guidelines for AI development, testing, deployment, and monitoring

Compliance mechanisms: Ensuring adherence to relevant regulations and industry standards

Documentation standards: Maintaining records of AI system designs, training data, performance metrics, and risk assessments

Audit procedures: Regularly reviewing AI systems to verify they operate as intended and within ethical boundaries

Many organizations are now creating dedicated AI governance committees with representation from technical teams, legal, compliance, ethics specialists, and business leaders. These committees typically work to align AI initiatives with the organization's values and risk tolerance while ensuring regulatory compliance.

For example, a healthcare organization might establish governance structures that require all AI systems affecting patient care to undergo rigorous review processes, including clinical validation, bias testing, and privacy impact assessments before approval. The governance framework would define who has decision-making authority, what documentation is required, and how frequently systems need to be reassessed.
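To make the healthcare example concrete, here is a minimal Python sketch of an approval gate. It assumes a policy in which any system affecting patient care must complete clinical validation, bias testing, and a privacy impact assessment before approval, and must be reassessed on a fixed schedule. The class, the function names, the assessment labels, and the 365-day interval are hypothetical illustrations, not part of any specific governance standard.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical policy values drawn from the healthcare example.
REQUIRED_FOR_PATIENT_CARE = {"clinical_validation", "bias_testing", "privacy_impact_assessment"}
REASSESSMENT_INTERVAL = timedelta(days=365)


@dataclass
class AISystem:
    name: str
    affects_patient_care: bool
    completed_assessments: set[str] = field(default_factory=set)


def missing_assessments(system: AISystem) -> set[str]:
    """Return the required assessments this system has not yet completed."""
    if not system.affects_patient_care:
        return set()
    return REQUIRED_FOR_PATIENT_CARE - system.completed_assessments


def can_approve(system: AISystem) -> bool:
    """Approve only when no required assessment is outstanding."""
    return not missing_assessments(system)


def reassessment_due(last_review: date, today: date) -> bool:
    """True once the policy's reassessment interval has elapsed."""
    return today - last_review >= REASSESSMENT_INTERVAL


triage = AISystem("triage-model", affects_patient_care=True,
                  completed_assessments={"clinical_validation", "bias_testing"})
print(can_approve(triage))          # False
print(missing_assessments(triage))  # {'privacy_impact_assessment'}
```

Encoding the rules this way keeps the decision criteria explicit and auditable. In a real organization, the required assessments and review interval would come from the governance committee's documented policy rather than hard-coded values.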

As AI regulation evolves globally—from the EU AI Act to industry-specific guidelines—governance frameworks provide the structure to adapt to these requirements systematically rather than reactively.

AI Safety: Ensuring Systems Operate Reliably as Intended

AI safety focuses on preventing harm and unintended consequences from AI systems, ensuring they operate reliably and as intended, especially in high-stakes environments. While governance provides the framework, safety encompasses the technical practices that keep AI systems reliable and free of harmful behavior.

AI safety has both broad and narrow interpretations:

In the broad sense, it covers all measures to make AI beneficial and non-harmful

In the narrower "technical AI safety" sense, it focuses on the mathematical and engineering approaches to ensuring AI systems behave predictably and safely

Key aspects of technical AI safety include:

Robustness: Ensuring AI systems perform consistently even with unexpected inputs or in adversarial conditions

Interpretability: Making AI decision-making transparent and understandable to human overseers

Alignment: Designing systems whose objectives and behaviors align with human values and intentions

Safety practices are particularly critical in high-consequence applications. For instance, autonomous vehicle systems incorporate redundant safety measures, extensive testing across diverse scenarios, and fail-safe mechanisms prioritizing human safety above all other objectives.

In domains like healthcare, AI safety might involve rigorous validation against diverse patient populations, monitoring for performance drift as patient demographics change, and human-in-the-loop verification for high-risk decisions.
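Monitoring for drift can start with a simple comparison between the population a model sees today and the population it was validated on. The sketch below uses the population stability index (PSI), one common drift statistic, over categorical proportions such as patient age bands. The age bands, proportions, and the 0.1/0.25 thresholds are illustrative assumptions (the thresholds are a widely used rule of thumb, not a clinical standard). Note that a shift in inputs is a signal to re-validate performance, not a measurement of performance in itself.

```python
import math


def population_stability_index(expected: dict[str, float],
                               actual: dict[str, float],
                               eps: float = 1e-6) -> float:
    """PSI = sum over categories of (a - e) * ln(a / e).

    `expected` and `actual` map each category to its proportion.
    A small epsilon avoids log(0) when a category is missing.
    """
    psi = 0.0
    for category in expected.keys() | actual.keys():
        e = max(expected.get(category, 0.0), eps)
        a = max(actual.get(category, 0.0), eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_flag(psi: float) -> str:
    # Rule-of-thumb thresholds; each organization would set its own.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: re-validate"
    return "significant shift: escalate"


# Hypothetical age-band mix at validation time vs. the current quarter.
baseline = {"18-39": 0.40, "40-64": 0.40, "65+": 0.20}
current = {"18-39": 0.25, "40-64": 0.40, "65+": 0.35}
psi = population_stability_index(baseline, current)
print(round(psi, 3), drift_flag(psi))
```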

As AI systems grow more powerful, the safety challenges evolve. Much current research focuses on ensuring that advanced AI systems remain reliably aligned with human intent even as they become more sophisticated and more capable of reasoning about their own operation.

Trustworthy AI: Worthy of Stakeholder Confidence

Trustworthy AI is not a single technique but an outcome: AI systems that merit stakeholder trust by operating transparently, fairly, reliably, and accountably. While safety focuses on preventing harm, trustworthiness encompasses the broader qualities that make AI systems worthy of user confidence.

Components of trustworthy AI include:

Explainability: Providing clear information on how AI makes decisions, particularly when those decisions affect people's lives or livelihoods

Fairness: Mitigating biases to ensure equitable treatment across demographic groups

Reliability: Performing consistently across circumstances and over time

Accountability: Clearly defining responsibility for AI outcomes and providing redress mechanisms

Human-centricity: Respecting human autonomy and enhancing human capabilities rather than diminishing human agency

The Blueprint for an AI Bill of Rights, published by the White House Office of Science and Technology Policy in 2022, illustrates key principles for trustworthy AI, including protections against algorithmic discrimination, safeguards for data privacy, and notice and explanation requirements intended to ensure that AI systems uphold civil rights and liberties.

In practical terms, building trustworthy AI often involves:

Diverse and representative training data

Regular bias audits and remediations

Clear communications about AI capabilities and limitations

Maintaining human oversight, especially for consequential decisions

Establishing appeals processes for affected individuals

For example, a financial institution deploying an AI-based loan approval system would need to ensure the system produces explainable decisions, doesn't perpetuate historical biases, maintains stable performance across different applicant groups, and allows for human review of edge cases—all while complying with fair lending regulations.
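One way to screen for the historical biases mentioned in the loan example is to compare approval rates across applicant groups. The sketch below computes each group's approval rate relative to the most-approved group and flags ratios under 0.8. That cutoff is the "four-fifths rule" borrowed from US employment-selection guidelines; here it serves only as a screening heuristic, not as a fair lending legal test, and the group labels and counts are hypothetical.

```python
from collections import Counter


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals = Counter(group for group, _ in decisions)
    approvals = Counter(group for group, approved in decisions if approved)
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's approval rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any approvals; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios: dict[str, float], threshold: float = 0.8) -> set[str]:
    # Four-fifths screening heuristic: flag groups below 80% of the top rate.
    return {group for group, ratio in ratios.items() if ratio < threshold}


# Hypothetical decisions: group A approved 80 of 100, group B 60 of 100.
decisions = ([("A", True)] * 80 + [("A", False)] * 20
             + [("B", True)] * 60 + [("B", False)] * 40)
ratios = adverse_impact_ratios(approval_rates(decisions))
print(flag_groups(ratios))  # {'B'}
```

A flagged group is a prompt for human review of features, training data, and decision thresholds, not proof of discrimination; conversely, passing this screen does not by itself establish compliance with fair lending regulations.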

Trustworthy AI becomes increasingly important as AI systems are deployed in sensitive contexts. When systems make or influence decisions about healthcare, employment, or legal outcomes, stakeholder trust is not merely desirable but essential for adoption and legitimacy.

Responsible AI: The Ethical Approach to Development and Deployment

Responsible AI represents a broad commitment to the ethical development and use of AI technologies. While trustworthy AI describes the systems' qualities, responsible AI encompasses the organizational culture, practices, and values that guide AI creation and deployment.

Responsible AI typically involves:

Ethical principles: Clear articulation of values that guide AI development and use

Inclusive design: Involving diverse stakeholders in AI system design and evaluation

Impact assessment: Evaluating the potential consequences of AI deployments on various stakeholders

Training and awareness: Educating teams about ethical considerations in AI

Continuous improvement: Regularly reassessing and refining approaches based on outcomes and stakeholder feedback

Many organizations have published responsible AI principles, but implementation requires converting high-level values into concrete practices. This means developing comprehensive governance structures, detailed policies, and targeted training programs that institutionalize ethical considerations throughout the AI lifecycle.

For example, Microsoft's Responsible AI Standard outlines requirements across six dimensions: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. These principles are operationalized through assessment tools, review processes, and dedicated roles responsible for ethical oversight.

The maturity of responsible AI practices varies widely across organizations. Some are beginning to articulate principles, while others have developed sophisticated frameworks with clear metrics for measuring compliance and effectiveness.

As AI capabilities advance, responsible AI practices must evolve to address new challenges. Issues such as synthetic media (deepfakes), increasingly human-like AI interactions, and autonomous systems require ongoing ethical consideration and the adaptation of responsible AI frameworks.

AI Risk Management: Proactively Addressing Potential Harms

AI risk management involves systematically identifying, assessing, and mitigating the various risks AI systems pose. While the previous concepts focus on building positive qualities, risk management targets potential adverse outcomes.

AI risks span multiple dimensions:

Technical risks: Algorithm errors, security vulnerabilities, unpredictable behaviors

Ethical risks: Bias, fairness issues, privacy violations

Legal and compliance risks: Regulatory violations, liability concerns

Reputational risks: Public backlash, loss of stakeholder trust

Operational risks: System failures, performance degradation, misalignment with business objectives

Formal frameworks like the NIST AI Risk Management Framework provide structured approaches to managing these risks throughout the AI lifecycle. The NIST framework, for instance, encompasses four core functions:

Map: Establish context for AI risks

Measure: Assess and analyze risks

Manage: Implement risk mitigation measures

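Govern: Cultivate a risk management culture and oversee and coordinate risk management activities; NIST designs this function to be cross-cutting, informing the other three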

In practice, AI risk management might include conducting bias and privacy impact assessments before deployment, monitoring AI outcomes for anomalies or unexpected behaviors, and developing incident response plans for potential AI failures.

ISO/IEC 23894:2023 similarly provides guidance on managing AI-related risks, helping organizations identify, assess, and mitigate them. Both frameworks emphasize continuous risk assessment rather than point-in-time evaluation.

For example, a retail company deploying an AI recommendation system might assess risks such as algorithmic bias that could alienate customer segments, privacy concerns around data collection and use, and technical risks like degraded recommendation quality when product catalogs change significantly.
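A risk register is one straightforward way to track assessments like these. The minimal sketch below scores each risk as likelihood × impact on 1-to-5 scales and surfaces the risks at or above a review threshold. This scoring scheme is a common convention in risk registers rather than something prescribed by the NIST framework or ISO/IEC 23894, and the scores and the threshold of 12 are hypothetical values chosen for the retail example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    description: str
    category: str    # e.g. "ethical", "technical", "legal"
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(register: list[Risk], threshold: int = 12) -> list[Risk]:
    """Risks at or above the (hypothetical) threshold, highest score first."""
    return sorted((r for r in register if r.score >= threshold),
                  key=lambda r: r.score, reverse=True)


# Hypothetical scores for the retail recommendation example.
register = [
    Risk("Bias alienates customer segments", "ethical", likelihood=3, impact=4),
    Risk("Privacy concerns over data collection and use", "ethical", likelihood=2, impact=5),
    Risk("Recommendation quality degrades after catalog changes", "technical", likelihood=4, impact=3),
]
for risk in prioritize(register):
    print(risk.score, risk.description)
```

Because the register is plain data, it can be re-scored whenever monitoring turns up new evidence, which fits the frameworks' emphasis on continuous rather than point-in-time assessment.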

As AI systems become more complex and autonomous, risk management approaches must evolve to address emergent behaviors and long-term impacts that may not be immediately apparent during development.

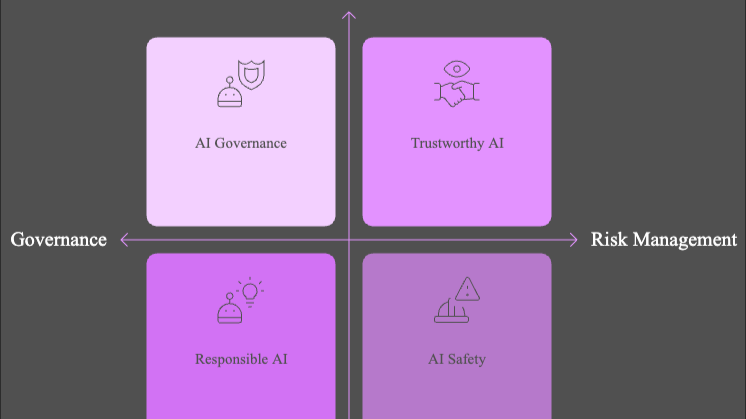

How These Concepts Interconnect

These five concepts—governance, safety, trustworthiness, responsibility, and risk management—form an integrated approach to ethical AI. Rather than standalone initiatives, they represent complementary facets of a comprehensive AI ethics framework:

AI governance provides the structural backbone—the policies, roles, and processes that enable all other aspects

AI safety focuses on the technical robustness and reliability of systems

Trustworthy AI encompasses the qualities that make AI systems worthy of stakeholder confidence

Responsible AI represents the organizational values and practices guiding ethical development

AI risk management proactively identifies and addresses potential harms

In practice, these elements work together throughout the AI lifecycle: governance structures ensure that safety practices are followed, risk management surfaces potential trustworthiness issues, and responsible AI principles shape governance priorities.

For instance, when developing a new AI system, an organization might:

Apply governance processes to determine required approvals and assessments

Implement safety practices during development and testing

Evaluate the system against trustworthiness criteria

Ensure responsible AI principles guide design choices

Conduct risk assessments to identify and mitigate potential harms

The Path Forward: Building Integrated Approaches

As AI continues transforming industries and society, organizations need integrated approaches that address all dimensions of ethical AI. Here are key considerations for building such approaches:

Align with organizational values: Effective AI ethics frameworks must reflect and reinforce the organization's core values.

Balance innovation and safeguards: Overly restrictive approaches may hamper innovation, while insufficient guardrails create unacceptable risks.

Embrace continuous evolution: Ethics frameworks must adapt as AI technologies and societal expectations evolve.

Foster cross-functional collaboration: Effective AI ethics requires input from technical teams, legal/compliance, ethics specialists, business leaders, and diverse stakeholders.

Prioritize based on risk: Apply the most rigorous oversight to high-risk applications while enabling streamlined approaches for lower-risk use cases.

Moving Forward

Understanding the distinctions between AI governance, safety, trustworthiness, responsibility, and risk management is essential for organizations navigating the complex landscape of AI ethics. Though interrelated, these concepts serve different functions in ensuring that AI systems benefit humanity while minimizing potential harm.

As regulatory requirements evolve and stakeholder expectations rise, organizations that develop integrated approaches to AI ethics will be better positioned to build trustworthy systems, manage risks effectively, and responsibly realize AI's transformative potential.

The future of AI depends not just on technical innovation but on our ability to develop and deploy these powerful technologies in ways that align with human values and promote human flourishing. By embracing comprehensive approaches to AI ethics, we can help ensure the future becomes one we all want to inhabit.