The 7 Stages of AI Governance Maturity

A Roadmap for Organizations

As artificial intelligence transforms business operations across industries, organizations face the critical challenge of implementing effective AI governance. Without proper oversight, AI systems can lead to ethical breaches, compliance violations, and reputational damage. But where should you begin, and how do you know whether your governance efforts are enough?

This maturity model provides a clear roadmap for organizations at any stage of their AI governance journey. Whether you're just starting to consider AI risks or leading your industry in responsible AI practices, understanding your current maturity level enables targeted improvements and strategic planning. By progressing through these seven stages, organizations can mitigate risks and ultimately transform AI governance from a compliance burden into a competitive advantage.

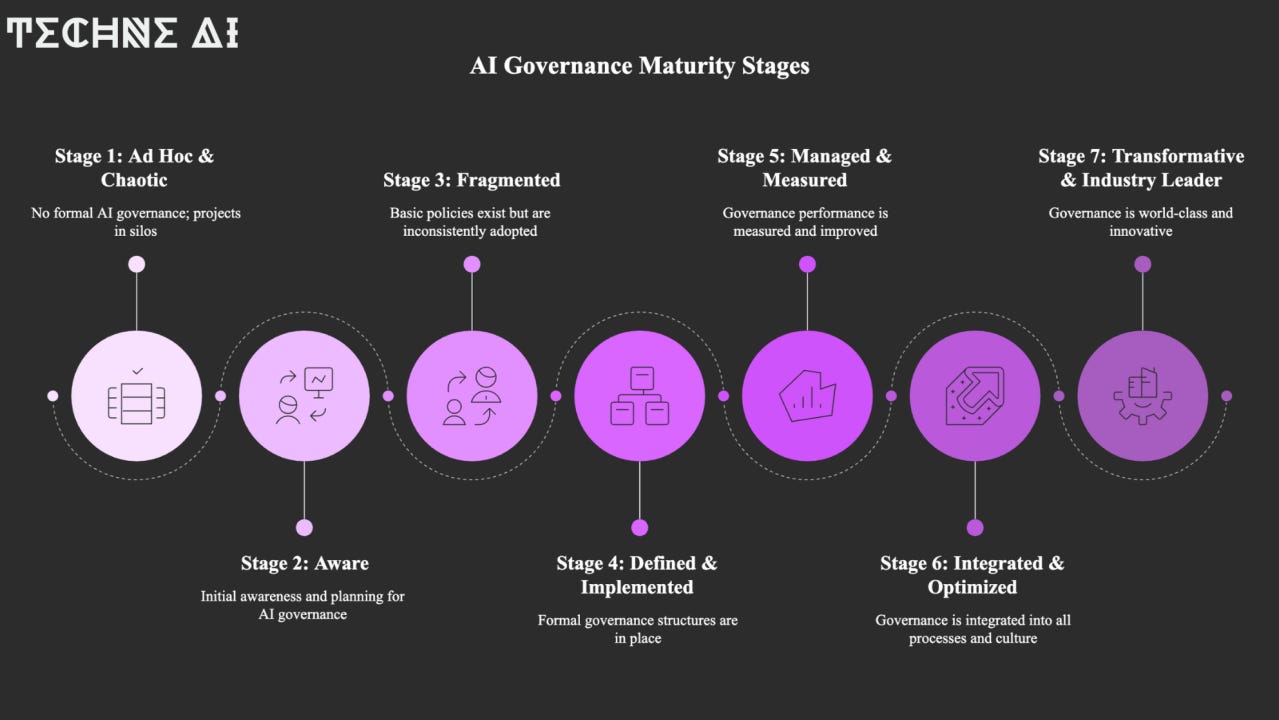

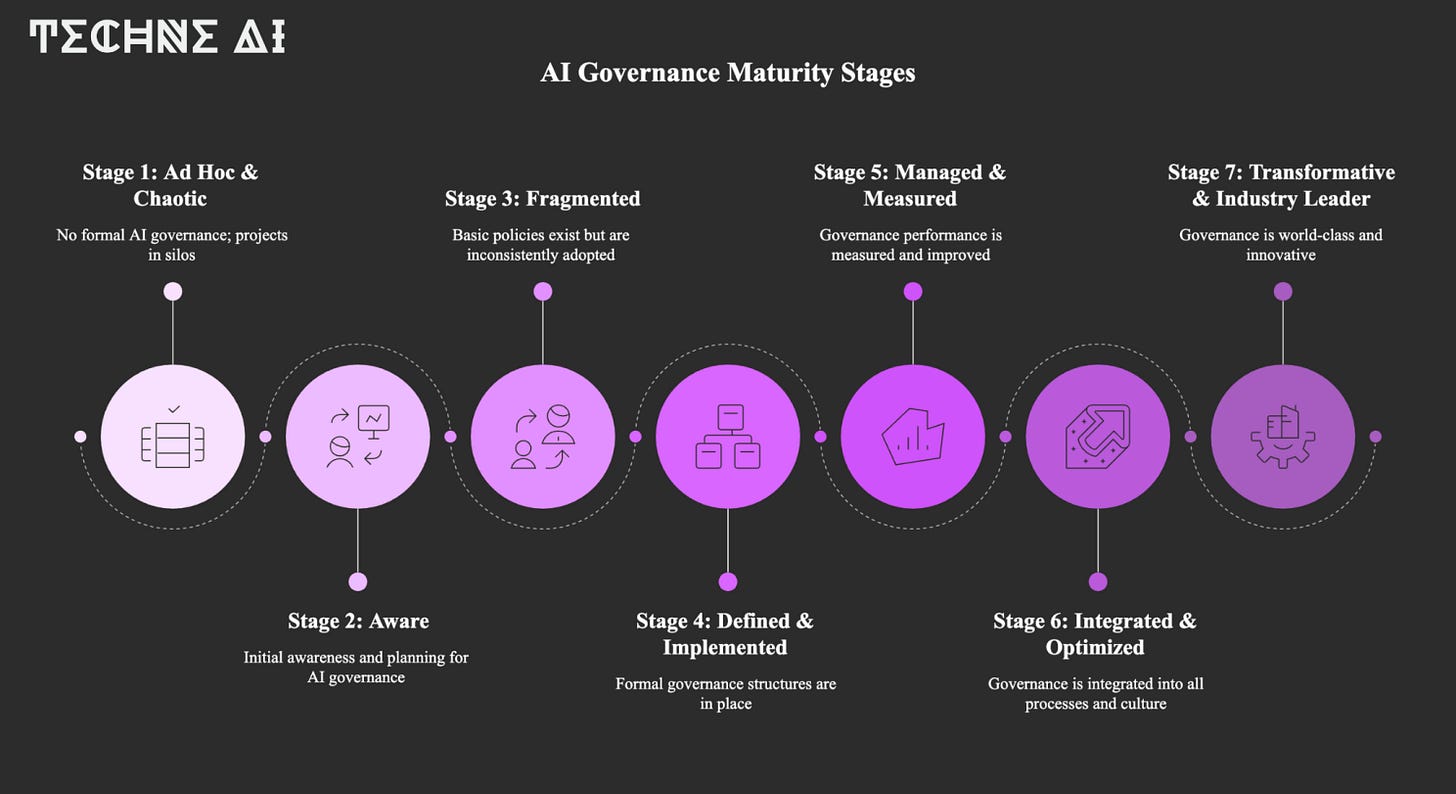

Stage 1: Ad Hoc & Chaotic

The organization has no formal AI governance. AI projects run in silos with little oversight. Decisions about AI ethics or risk are left to individual developers or teams and are often made inconsistently. Leadership may not even be aware of AI-specific risks.

Assessment: No dedicated AI policies or roles exist.

Challenge: Lack of awareness and coordination means potential ethical breaches or compliance violations can go unnoticed.

Best Practice: Begin raising awareness, for example by conducting a basic AI risk workshop or designating someone to inventory AI projects.

Stage 2: Aware (Initial Awareness & Planning)

The organization has recognized the need for AI governance and is in planning mode. Discussions begin, and working groups may form to address AI ethics or compliance. Policies are rudimentary or still in draft. There may be a champion (such as a concerned manager or an innovation officer) pushing for governance.

Assessment: An initial AI governance framework document exists, or an AI ethics committee has been formed, even if it has no authority yet.

Challenge: Moving from talk to action. People agree governance is important but may not know how to implement changes, or they fear stifling innovation.

Best Practice: Develop a governance roadmap (e.g., plan to publish an AI ethics policy, assign roles, and pilot governance procedures in one project).

Stage 3: Fragmented (Basic Policies, Inconsistent Adoption)

At this stage, basic AI governance policies or guidelines have been defined (e.g., a Responsible AI guideline), but adoption is spotty: some teams comply, and others don’t. A few processes may exist, such as reviewing AI projects for obvious issues, but they are not applied enterprise-wide.

Assessment: Policies exist on paper, and training may have been offered. Some projects (often high-profile ones) have been governed, but many smaller ones slip through unmanaged.

Challenge: Enforcement and coverage. Governance is not yet ingrained in the culture, and people may view it as a box-ticking exercise.

Best Practice: Start enforcing policies by integrating them into the project lifecycle (e.g., require a compliance sign-off before deployment). Also communicate success stories in which governance averted a problem, to show its value.

Stage 4: Defined & Implemented

Formal AI governance structures are in place and functioning. There is likely a central committee or officer for AI oversight. Policies have been refined and clearly communicated. Most AI projects go through required steps such as risk assessment, bias testing, and approval gates. AI governance is part of the organization’s standard operating procedures, similar to quality or security management.

Assessment: A high percentage of AI initiatives follow the governance process, and governance artifacts (risk assessments, model cards) exist for each project. The organization may be pursuing external certification (e.g., against ISO/IEC 42001).

Challenge: Scaling the process without slowing innovation too much, so that governance keeps up with the number of projects and doesn’t become a bottleneck.

Best Practice: Use tools and templates to streamline governance (checklists, assessment questionnaires). Also secure leadership support by holding periodic executive-level reviews of AI governance, which signals that it is taken seriously.

Stage 5: Managed & Measured

The organization now measures its AI governance performance and continuously improves it. Governance has dedicated resources (e.g., a Responsible AI team). Metrics might include the number of projects reviewed, incidents detected, and training completion rates. AI governance is integrated with enterprise risk management: AI risks are on the corporate risk register and are monitored.

Assessment: Quantitative metrics and audits show compliance rates and risk levels. The organization can demonstrate, for example, that 100% of high-risk AI systems underwent a bias audit and that the rate of AI incidents is decreasing.

Challenge: Avoiding complacency. At this stage it is easy to think “we have it under control” while the AI landscape keeps evolving. Complexity also grows, because AI governance now intersects with data governance, model risk management, and related functions, which requires coordination.

Best Practice: Establish feedback loops: after each project or audit, hold a retrospective and update governance practices. Benchmark against peers or standards (for example, by joining an industry consortium to compare notes). Consider adopting governance, risk, and compliance (GRC) software adapted for AI to manage documentation and approvals.
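Metrics like these reduce to simple ratios over an inventory of AI systems. The sketch below is a minimal illustration, not a prescribed method: it assumes a hypothetical inventory record (`AISystem`) with `risk_level`, `reviewed`, and `bias_audited` fields, and an assumed rule that incidents are "decreasing" when no period rises and the latest period is below the first. All names, fields, and sample data are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AISystem:
    # Hypothetical inventory record; field names are illustrative assumptions.
    name: str
    risk_level: str      # assumed to be "high" or "low"
    reviewed: bool       # went through the governance review process
    bias_audited: bool   # completed a bias audit


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place; 0.0 if there is nothing to measure."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def governance_metrics(systems: List[AISystem], trained: int, staff: int) -> Dict[str, float]:
    """Compute coverage-style governance metrics from the inventory."""
    high_risk = [s for s in systems if s.risk_level == "high"]
    return {
        "review_coverage_pct": pct(sum(s.reviewed for s in systems), len(systems)),
        "high_risk_bias_audit_pct": pct(sum(s.bias_audited for s in high_risk), len(high_risk)),
        "training_completion_pct": pct(trained, staff),
    }


def incidents_decreasing(incidents_per_period: List[int]) -> bool:
    """Assumed trend rule: no period rises, and the latest is below the first."""
    if len(incidents_per_period) < 2:
        return False
    pairs = zip(incidents_per_period, incidents_per_period[1:])
    never_rises = all(later <= earlier for earlier, later in pairs)
    return never_rises and incidents_per_period[-1] < incidents_per_period[0]


# Illustrative, made-up inventory.
systems = [
    AISystem("credit-scoring", "high", reviewed=True, bias_audited=True),
    AISystem("support-chatbot", "high", reviewed=True, bias_audited=True),
    AISystem("churn-model", "low", reviewed=True, bias_audited=False),
    AISystem("demand-forecast", "low", reviewed=False, bias_audited=False),
]
print(governance_metrics(systems, trained=45, staff=50))
# {'review_coverage_pct': 75.0, 'high_risk_bias_audit_pct': 100.0, 'training_completion_pct': 90.0}
print(incidents_decreasing([5, 4, 4, 2]))  # True
```

Returning 0.0 for an empty denominator is a deliberate simplification; a real program might prefer to flag "no data" instead.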

Stage 6: Integrated & Optimized

AI governance is fully integrated into all business processes and the culture. It is not a separate or burdensome activity; it is how the company does AI. Employees proactively raise issues, and governance considerations are part of innovation discussions from the start (ethics by design). The organization may be certified or externally validated for its governance (e.g., ISO/IEC 42001 certified, or rated highly on AI ethics in ESG evaluations).

Assessment: AI governance considerations regularly appear in strategic planning, product development, and board oversight. External audits find minimal issues and praise internal processes.

Challenge: At this maturity level, the challenges are staying adaptive (the external world may impose new requirements) and ensuring that the governance model itself keeps innovating, for example by adopting improved explainability tools or quickly addressing novel technologies such as generative models.

Best Practice: Regularly review the governance framework against new advances and update it. Engage with external stakeholders, for example by publishing your governance approach and inviting feedback, or by contributing to industry standards. This keeps the program current and maintains external trust.

Stage 7: Transformative & Industry Leader

The organization’s world-class AI governance gives it a competitive and reputational edge. It not only manages risks but innovates through governance. Because it is ahead of the curve, the company may help shape regulations and standards. Governance at this level can help the company enter new markets quickly, because regulators trust its processes. The company may also release responsible AI tools or frameworks for others to use (thought leadership).

Assessment: The organization is cited as a model for Responsible AI in its industry. It has had no major incidents in recent history and enjoys strong trust from customers and regulators. The company may sit on regulatory advisory boards or standards bodies.

Challenge: Sustaining leadership, which requires ongoing effort and resources. Sharing practices might also seem to erode competitive advantage, but leading firms recognize that raising the industry standard benefits everyone.

Best Practice: Embrace transparency: publish AI ethics reports and open-source selected tools, such as those for fairness and explainability. Mentor other organizations or subsidiaries in adopting similar governance. At this stage, governance is seen not as a cost but as an enabler of bold AI-driven strategies, because stakeholder trust lowers barriers.

Key Takeaways

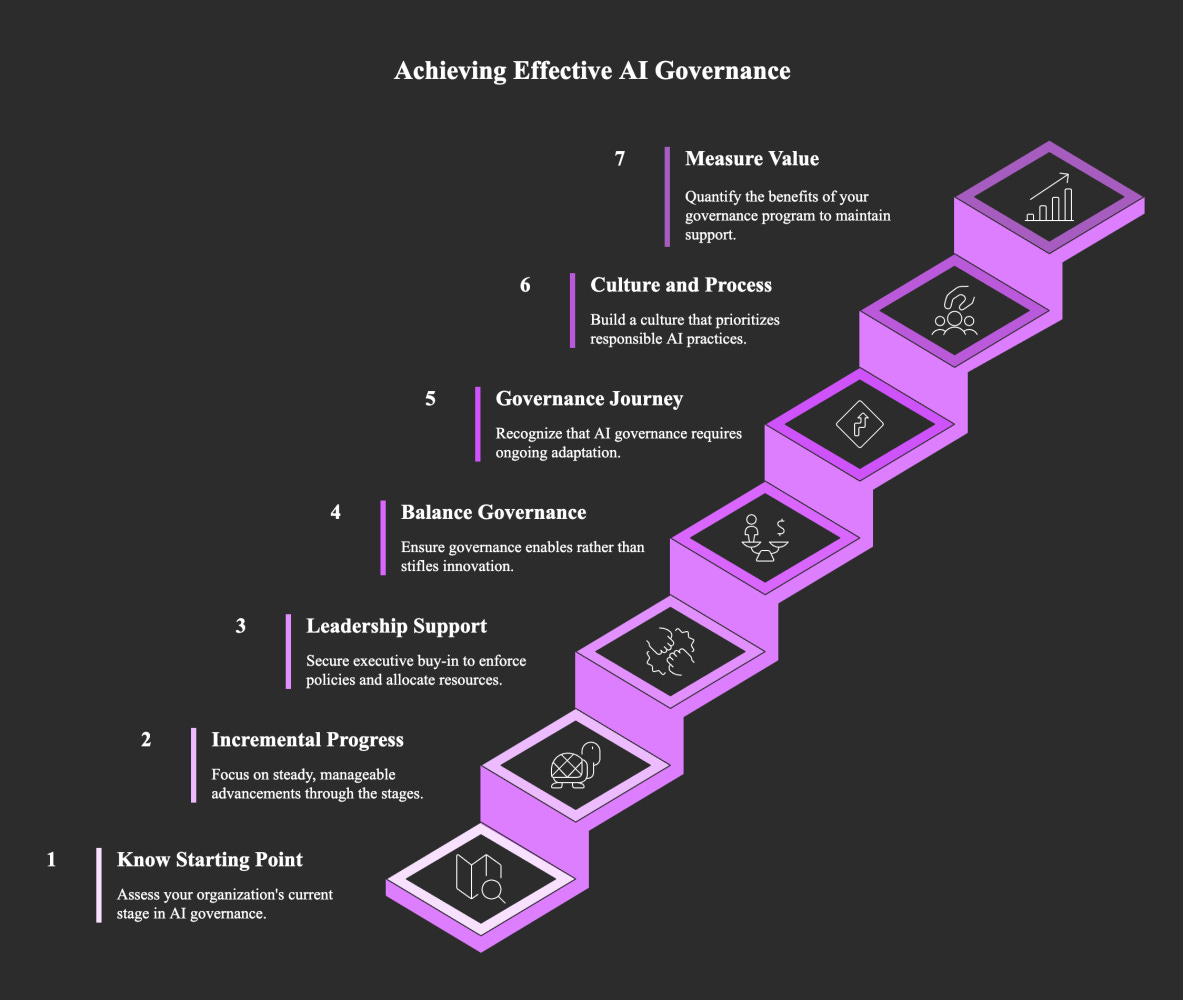

Know Your Starting Point: Assess your organization honestly against these seven stages. Most organizations begin at Stage 1 or 2; acknowledging your current position is the first step toward improvement.

Incremental Progress is Valuable: Moving from one stage to the next brings significant benefits. Don't try to jump from Stage 1 to Stage 6 overnight – focus on steady progress.

Leadership Support is Critical: AI governance requires executive buy-in at every stage. Without leadership support, policies remain unenforced and resources inadequate.

Balance Governance and Innovation: Effective AI governance doesn't stifle innovation – it enables responsible innovation by building trust with stakeholders and preventing costly mistakes.

Governance is a Journey, Not a Destination: The AI landscape continues to evolve rapidly with new technologies, regulations, and ethical considerations. Even organizations at Stage 7 must continuously adapt their governance approaches.

Culture Matters as Much as Process: As you progress through the stages, focus not just on policies and procedures but on building a culture where responsible AI is everyone's concern.

Measure and Demonstrate Value: Quantify the benefits of your governance program – incidents prevented, compliance achieved, trust built – to maintain momentum and support.

Organizations can use this AI governance maturity model to pinpoint their current status (for example, Stage 3 if they have some policies but apply them inconsistently) and plan targeted improvements (for example, reaching Stage 4 by formalizing a governance committee and mandating governance processes across all projects).
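One way to put this into practice is a simple yes/no self-assessment. The sketch below is a hypothetical simplification, not part of the model as described: it assumes one indicator per stage, paraphrased from the Assessment notes, and assumes stages are cumulative, so an organization is placed at the highest stage it has reached without skipping an earlier one. The names `STAGE_INDICATORS`, `current_stage`, and `next_focus` are invented for illustration.

```python
from typing import Dict, Optional

# Hypothetical checklist: one yes/no indicator per stage, paraphrased from the
# "Assessment" notes above. Stage 1 is the baseline and needs no indicator.
STAGE_INDICATORS: Dict[int, str] = {
    2: "initial governance framework drafted or AI ethics committee formed",
    3: "basic AI governance policies published",
    4: "most AI projects pass required governance steps",
    5: "governance metrics tracked and AI risks on the corporate risk register",
    6: "governance embedded in strategy, product development, and board oversight",
    7: "recognized as an industry model for responsible AI",
}


def current_stage(answers: Dict[int, bool]) -> int:
    """Highest stage whose indicator, and every earlier one, is met (assumed cumulative)."""
    stage = 1
    for s in sorted(STAGE_INDICATORS):
        if not answers.get(s, False):
            break
        stage = s
    return stage


def next_focus(answers: Dict[int, bool]) -> Optional[str]:
    """Indicator to work on next, or None once Stage 7 is reached."""
    return STAGE_INDICATORS.get(current_stage(answers) + 1)


# Policies exist but are not applied consistently; some metrics are tracked anyway.
answers = {2: True, 3: True, 4: False, 5: True}
print(current_stage(answers))  # 3
print(next_focus(answers))     # most AI projects pass required governance steps
```

Treating the stages as cumulative mirrors the advice not to jump stages: in the example, the Stage 5 answer does not count until the Stage 4 indicator is met.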